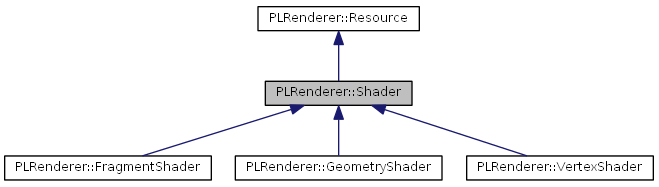

Abstract renderer shader resource. More...

#include <Shader.h>

Public Member Functions | |

| virtual PLRENDERER_API | ~Shader () |

| Destructor. | |

| virtual PLCore::String | GetShaderLanguage () const =0 |

| Returns the name of the shader language the shader is using. | |

| virtual PLCore::String | GetSourceCode () const =0 |

| Returns the shader source code. | |

| virtual PLCore::String | GetProfile () const =0 |

| Returns the name of the shader profile the shader is using. | |

| virtual PLCore::String | GetEntry () const =0 |

| Returns the name of the shader entry point the shader is using. | |

| virtual bool | SetSourceCode (const PLCore::String &sSourceCode, const PLCore::String &sProfile="", const PLCore::String &sEntry="")=0 |

| Sets the shader source code. | |

Static Public Member Functions | |

| static PLRENDERER_API PLCore::String | RemovePrecisionQualifiersFromGLSL (const PLCore::String &sSourceCode) |

| Removes precision qualifiers from the given GLSL shader source code. | |

Protected Member Functions | |

| PLRENDERER_API | Shader (Renderer &cRenderer, EType nType) |

| Constructor. | |

Detailed Description

Abstract renderer shader resource.

Constructor & Destructor Documentation

| virtual PLRENDERER_API PLRenderer::Shader::~Shader | ( | ) | [virtual] |

Destructor.

| PLRENDERER_API PLRenderer::Shader::Shader | ( | Renderer & | cRenderer, |

| EType | nType | ||

| ) | [protected] |

Constructor.

- Parameters:

-

[in] cRenderer Owner renderer [in] nType Resource type

Member Function Documentation

| static PLRENDERER_API PLCore::String PLRenderer::Shader::RemovePrecisionQualifiersFromGLSL | ( | const PLCore::String & | sSourceCode | ) | [static] |

Removes precision qualifiers from the given GLSL shader source code.

- Parameters:

-

[in] sSourceCode Shader source code, usually blank ASCII code

- Returns:

- The modified GLSL shader source code

- Remarks:

- From the "The OpenGL® Shading Language" specification "Language Version: 3.30, Document Revision: 6, 11-Mar-2010" "Precision qualifiers are added for code portability with OpenGL ES, not for functionality. They have the same syntax as in OpenGL ES, as described below, but they have no semantic meaning, which includes no effect on the precision used to store or operate on variables." Although the precision qualifiers "should" have no effect when not using OpenGL ES, we noticed that some NVIDIA GPU drivers produced compiler errors when using precision qualifiers. Due this buggy behavior, it's recommended to remove the precision qualifiers before passing on the shader source code to OpenGL.

| virtual PLCore::String PLRenderer::Shader::GetShaderLanguage | ( | ) | const [pure virtual] |

Returns the name of the shader language the shader is using.

- Returns:

- The name of the shader language the shader is using (for example "GLSL" or "Cg")

| virtual PLCore::String PLRenderer::Shader::GetSourceCode | ( | ) | const [pure virtual] |

Returns the shader source code.

- Returns:

- The shader source code

| virtual PLCore::String PLRenderer::Shader::GetProfile | ( | ) | const [pure virtual] |

Returns the name of the shader profile the shader is using.

- Returns:

- The name of the shader profile the shader is using (for example "arbvp1")

| virtual PLCore::String PLRenderer::Shader::GetEntry | ( | ) | const [pure virtual] |

Returns the name of the shader entry point the shader is using.

- Returns:

- The name of the shader entry point the shader is using (for example "main")

| virtual bool PLRenderer::Shader::SetSourceCode | ( | const PLCore::String & | sSourceCode, |

| const PLCore::String & | sProfile = "", |

||

| const PLCore::String & | sEntry = "" |

||

| ) | [pure virtual] |

Sets the shader source code.

- Parameters:

-

[in] sSourceCode Shader source code, usually blank ASCII code [in] sProfile Shader profile to use, if empty string, a default profile will be used which usually tries to use the best available profile that runs on most hardware [in] sEntry Entry point, if empty string, "main" is used as default

- Returns:

- 'true' if all went fine, else 'false'

- Remarks:

- 'sProfile' is not supported by each shader-API and is in general shader-API dependent. GLSL doesn't have such profiles, just something named "version" - one has to directly write into the shader. But even when this information is not used for compiling the GLSL shader, we highly recommend to provide GLSL version information in the form of e.g. "130" for OpenGL 3.0 shaders ("#version 130"). Within Cg, a basic vertex profile may be "arbvp1" and a basic fragment profile "arbfp1". Cg also provides GLSL profiles: "glslv" for vertex shader, "glslg" for geometry shader and "glslf" for fragment shader. Please note that the profile is just a hint, if necessary, the implementation is free to choose another profile. In general, be carefully when explicitly setting a profile - when using Cg, and one of the shaders, a GPU program is composed of, is a GLSL profile like "glslv", all other shaders must use GLSL profiles as well!

'sEntry' is not supported by each shader-API. GLSL doesn't have such an user defined entry point and the main function must always be "main". Cg supports entry points with names other than "main".

Look out! When working with shaders you have to be prepared that a shader may work on one system, but fails to even compile on another one. Sadly, even if there are e.g. official GLSL specifications, you CAN'T be sure that every GPU driver is implementing them in detail. Here are some pitfalls which already produced some headaches...

When using GLSL, don't forget to provide the #version directive! Quote from "The OpenGL® Shading Language - Language Version: 3.30 - Document Revision: 6 - 11-Mar-2010" Page 14 "Version 1.10 of the language does not require shaders to include this directive, and shaders that do not include a #version directive will be treated as targeting version 1.10." It looks like that AMD/ATI drivers ("AMD Catalyst™ 11.3") are NOT using just version 1.10 if there's no #version directive, but a higher version... so don't trust your GPU driver when your GLSL code, using modern language features, also works for you without #version directive, because it may not on other systems! OpenGL version and GLSL version can be a bit confusing, so here's a version table: GLSL #version OpenGL version Some comments 110 2.0 120 2.1 130 3.0 Precision qualifiers added "attribute" deprecated; linkage between a vertex shader and OpenGL for per-vertex data -> use "in" instead "varying"/"centroid varying" deprecated; linkage between a vertex shader and a fragment shader for interpolated data -> use "in"/"out" instead 140 3.1 150 3.2 330 3.3 400 4.0 410 4.1 #version must occur before any other statement in the program as stated within: "The OpenGL® Shading Language - Language Version: 3.30 - Document Revision: 6 - 11-Mar-2010" Page 15 "The #version directive must occur in a shader before anything else, except for comments and white space." ... sadly, this time NVIDIA (driver: "266.58 WHQL") is not implementing the specification in detail and while on AMD/ATI drivers ("AMD Catalyst™ 11.3") you get the error message "error(#105) #version must occur before any other statement in the program" when breaking specification, NVIDIA just accepts it without any error.

Enough on GLSL - now to Cg. Sadly, in general Cg is on AMD/ATI GPU's just poor due to the lack of modern profiles. When using Cg on none NVIDIA GPU's you have virtually no other change then using the GLSL profiles in order to write shaders using modern features. While the concept of Cg is fantastic, this lack of modern none NVIDIA profiles destroys many of Cg's advantages...

The documentation for this class was generated from the following file: